Illustration under a Creative Commons license.

Modality-independent core brain network for language as proved by sign language

Abstract

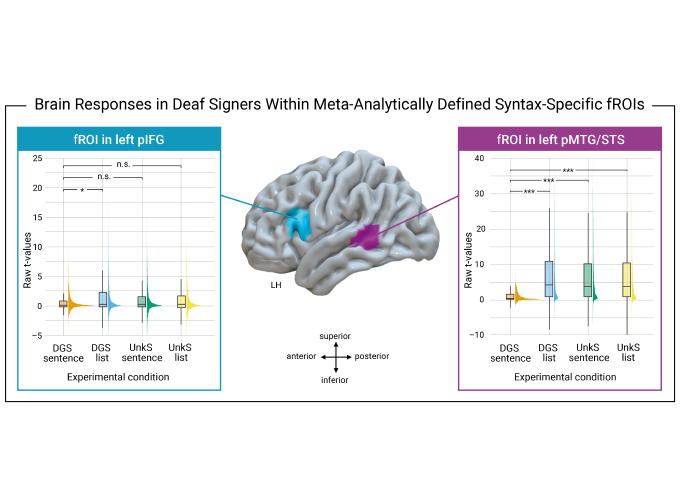

The human brain has the capacity to automatically compute the grammatical relations of words in sentences, be they spoken or written. This species-specific ability for syntax lies at the core of our capacity for language and is primarily subserved by a left-hemispheric fronto-temporal network consisting of the posterior inferior frontal gyrus (pIFG), as well as the posterior middle temporal gyrus and superior temporal sulcus (pMTG/STS). To date, it remains unclear whether this core network for syntactic processing, identified for spoken and written language in hearing people, also holds for the processing of the grammatical structure of a natural sign language in deaf people. Using functional magnetic resonance imaging, a sign language paradigm that systematically varied the presence of syntactic and lexical-semantic information, and meta-analytically defined functional regions of interest derived from a large dataset of syntactic processing in hearing non-signers, we demonstrate that deaf native signers of German Sign Language (DGS) also recruit left pIFG and pMTG/STS for computing grammatical relations in sign language, indicating the universality of the core language network. These findings suggest that the human brain evolved a dedicated neural network for processing the grammatical structure of natural languages independent of language modality, which flexibly interacts with different externalization systems depending on the modality of language use.